Yesterday, we had the privilege of having to create Hive tables on top of a HBase table with avro columns. Piece of cake, we thought, since you just have to specify the schema location in Hive (since Hive 1.0, it’s actually possible to map avro schema in HBase columns to Hive columns), map it to the column in HBase and voila, it works.

Except that it just doesn’t, at least if you have a large schema definition. In our specific case, we have a XSD Schema at the root – and like all things XML, it’s quite substantial. Converted to something decent like Avro, it is still quite big. That’s when we discovered that Hive has a limit for its Serializer of 4000 chars. That limit makes sense when you consider that Hive stores its metadata in a SQL database. So after googling a bit, we found this post:

http://gbif.blogspot.de/2014/03/lots-of-columns-with-hive-and-hbase.html

which basically pointed to the SERDE_PARAMS table. And unsurprisingly, the limit there is VARCHAR(4000).

Following that advice, we updated the schema of the database using this:

alter table SERDE_PARAMS MODIFY PARAM_VALUE TEXT;

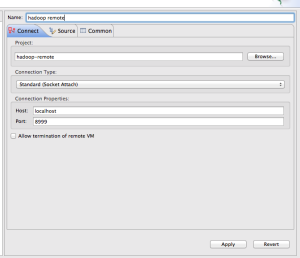

That as such isn’t enough though, because Hive was still consistently failing. Since finding out the second cause was a slightly painful experience involving remote debugging Hive Server (thanks again, Roland), without further ado, here’s the second update command:

alter table SD_PARAMS MODIFY PARAM_VALUE TEXT;

That’s also necessary, because after deserializing, Hive stores the extracted column in StorageDefinition (hence the SD in the table name), and that has the same limit.